audioflux.chroma_cqt

- audioflux.chroma_cqt(X, chroma_num=12, num=84, samplate=32000, low_fre=32.70319566257483, bin_per_octave=12, factor=1.0, thresh=0.01, window_type=WindowType.HANN, slide_length=None, normal_type=SpectralFilterBankNormalType.AREA, is_scale=True)

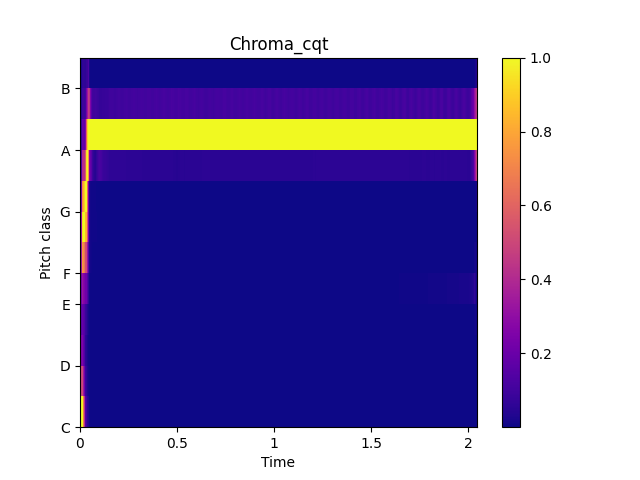

Constant-Q chromagram

Note

We recommend using the CQT class, which is more flexible and efficient.

- Parameters

- X: np.ndarray [shape=(…, n)]

audio time series.

- chroma_num: int

Number of chroma bins to generate.

- num: int

Number of frequency bins to generate, starting at low_fre.

Usually:

num = octave * bin_per_octave, default: 84 (7 * 12)

- samplate: int

Sampling rate of the incoming audio.

- low_fre: float

Lowest frequency. Default: 32.703 (C1).

- bin_per_octave: int

Number of bins per octave.

- factor: float

Factor value

- thresh: float

Thresh value

- window_type: WindowType

Window type for each frame.

See:

type.WindowType

- slide_length: int

Window sliding length.

- normal_type: SpectralFilterBankNormalType

Spectral filter normal type. It determines the type of normalization.

- is_scale: bool

Whether to use scale.
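The relationship between num, low_fre and bin_per_octave can be checked with a few lines of plain Python. This is a minimal sketch that assumes the usual constant-Q layout, where bin k is centred at low_fre * 2 ** (k / bin_per_octave); the helper `bin_frequency` and the variable `octaves` are illustrative names, not part of audioflux.

```python
# Defaults taken from the chroma_cqt signature
low_fre = 32.70319566257483  # C1
bin_per_octave = 12
samplate = 32000
octaves = 7                  # illustrative: number of octaves to cover

# num = octave * bin_per_octave
num = octaves * bin_per_octave


def bin_frequency(k):
    """Centre frequency of bin k, assuming geometric (constant-Q) spacing."""
    return low_fre * 2.0 ** (k / bin_per_octave)


highest = bin_frequency(num - 1)  # centre of the last bin
nyquist = samplate / 2

print(f"num = {num}")
print(f"highest bin centre = {highest:.2f} Hz, Nyquist = {nyquist:.0f} Hz")
```

Under this assumption the default 84 bins starting at C1 top out just below 4 kHz, well under the Nyquist frequency of the default 32000 Hz sampling rate. If you raise num or low_fre, it is worth repeating this check against your own samplate.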

- Returns

- out: np.ndarray [shape=(…, chroma_num, time)]

The matrix of CHROMA_CQT
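To make the output shape concrete, the sketch below folds a CQT-like magnitude matrix of shape (…, num, time) into (…, chroma_num, time) by summing bins that share a pitch class. It illustrates the general idea of a chromagram only: `fold_to_chroma` is a hypothetical helper written in NumPy, it assumes the first bin sits on the first pitch class, and it does not reproduce the normalization that audioflux applies through normal_type or is_scale.

```python
import numpy as np


def fold_to_chroma(cqt_mag, chroma_num=12, bin_per_octave=12):
    """Sum bins of the same pitch class; input shape (..., num, time)."""
    if bin_per_octave % chroma_num != 0:
        raise ValueError("bin_per_octave must be a multiple of chroma_num")
    num = cqt_mag.shape[-2]
    bins_per_chroma = bin_per_octave // chroma_num
    # Pitch-class index of every frequency bin
    chroma_index = (np.arange(num) % bin_per_octave) // bins_per_chroma
    out_shape = cqt_mag.shape[:-2] + (chroma_num, cqt_mag.shape[-1])
    out = np.zeros(out_shape, dtype=cqt_mag.dtype)
    for c in range(chroma_num):
        # Select all bins belonging to pitch class c and sum over frequency
        out[..., c, :] = cqt_mag[..., chroma_index == c, :].sum(axis=-2)
    return out


# Synthetic input: 84 bins, 5 frames, energy in two bins nine steps above an octave start
cqt_mag = np.zeros((84, 5))
cqt_mag[9] = 1.0
cqt_mag[21] = 2.0
chroma = fold_to_chroma(cqt_mag)
print(chroma.shape)
```

With the default low_fre at C1 and 12 bins per octave, bins 9 and 21 correspond to A1 and A2, so both land in the same chroma row.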

See also

Examples

Read 220Hz audio data

>>> import audioflux as af
>>> audio_path = af.utils.sample_path('220')
>>> audio_arr, sr = af.read(audio_path)

Extract chroma_cqt data

>>> chroma_arr = af.chroma_cqt(audio_arr, samplate=sr)

Show spectrogram plot

>>> import matplotlib.pyplot as plt
>>> from audioflux.display import fill_spec
>>> import numpy as np
>>>
>>> # calculate x-coords
>>> audio_len = audio_arr.shape[-1]
>>> x_coords = np.linspace(0, audio_len/sr, chroma_arr.shape[-1] + 1)
>>>
>>> fig, ax = plt.subplots()
>>> img = fill_spec(chroma_arr, axes=ax,
...                 x_coords=x_coords,
...                 x_axis='time', y_axis='chroma',
...                 title='Chroma_cqt')
>>> fig.colorbar(img, ax=ax)